This guest post is authored by Tim Gellner, a senior consultant with the manufacturing IT group at MAVERICK Technologies, a Rockwell Automation company.

Is overall equipment effectiveness (OEE) a “magic metric” or just another flavor-of-the-month measurement? The answer is that it can be either, but not both. We have become quite good at boiling production metrics down to a single value that is green, yellow or red when compared with an arbitrary goal or world-class standard, and that is as far as we go. The result is alternating periods of contentment, concern and panic. When the value is green, we pay no attention to it. When it is yellow, we hope it will be green again soon, so we tweak something and check it periodically. When it slips to red, we scramble and then write reports. Before long, the efforts to keep us out of the red compound the original problem, and we struggle to get back to where we were before we had the green, yellow and red metric. At this point the program is relegated to “flavor of the month” status and abandoned. For OEE to become the “magic metric,” there are some empirical truths that must be ingrained in the organization at the outset of the program definition.

OEE is a continuous improvement program and therefore requires a sustained commitment to produce results. A successful implementation requires an overarching vision for the program and comprehensive support from management (top down) as well as understanding, knowledge and realization of benefits from the plant floor (bottom up). The meeting ground is the data analysis and improvement methodology (we are all on the same team with a common goal). It is imperative that those responsible for the OEE numbers see the program as both a method for analysis and a means for improvement, not another number to have to defend or explain.

Implementing an OEE program is as much about people and processes as it is about technology. Ignoring the contribution of the first two components will lead to inevitable failure, regardless of the sophistication of the third. Conversely, if the technology (hardware and software) is cumbersome and difficult to use, the knowledge and enthusiasm of the people will be lost in their attempts to interact with it, and the OEE program is doomed.

Standardize everything, get everyone to agree, and stick to it. When you stop laughing, consider this: even if the program will only encompass a single machine in a single facility, you must still define and document what you are measuring, how you will measure it, and how you will analyze the resulting data. Now consider the case where the program will be rolled out to multiple facilities, with multiple lines and machines. If the OEE metric is to be meaningful (i.e., to facilitate “apples to apples” analysis), then the following items must be standardized across the enterprise:

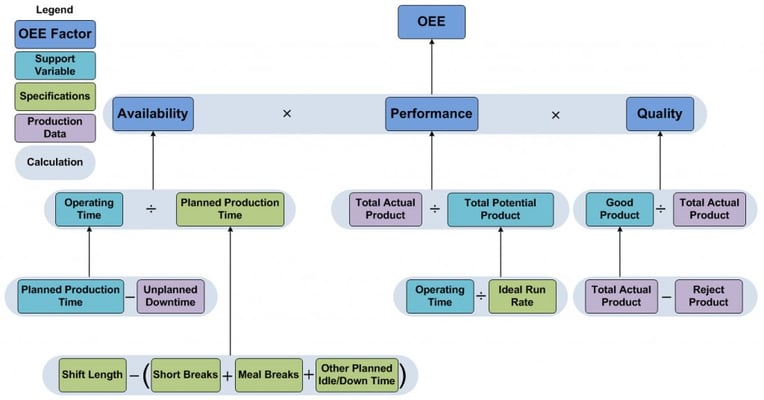

- OEE Calculation Model − The basic calculation is straightforward: OEE = Availability × Performance × Quality. The variance occurs in the lower levels of the calculation. The OEE calculation model diagram shown here represents the calculation model agreed upon by a company in the initial phase of a multi-line, multi-facility OEE program rollout.

- OEE Factor Definitions − The terms in each block of the diagram must be fully defined as they relate to the production processes within the organization that are to be included in the program.

- Downtime Reasons − While downtime reasons are not required for the calculation of OEE, it is advantageous to assign reasons that provide meaningful context to the event and duration data. Ideally, an automated system would assign the reasons for unplanned stops automatically; such assignments are reliable and readily adhere to standards, but they are generally not available. We usually have to rely on operators to assign downtime reasons manually. To be effective, the reason assignment process must be quick and easy. To this end, we must present a list of reasons that is broad enough to provide assignable explanations for both planned and unplanned downtime events, yet narrow enough that it is not overwhelming.

- Methods for Data Analysis − The data derived from manufacturing processes contain both natural variability and signals. The key to meaningful data analysis is to filter out the natural variability and leave the signals. Understanding and acting on the signals in the data is the key to improvement, which is the goal of any OEE program. Far too often, we rush to produce slick, glitzy charts that look great but do not support meaningful analysis. Because they fold the variability and the signals together into a description of the data rather than an analysis of it, such charts often become the basis for the alternating periods of contentment, concern and panic. To avoid this pitfall, I turn to Shewhart’s process behavior charts.
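To make the basic calculation from the first item concrete, here is a minimal Python sketch. It assumes the commonly used lower-level definitions (Availability as run time over planned production time, Performance as ideal cycle time times total count over run time, Quality as good count over total count). The model an organization agrees on may define these levels differently, and the `ShiftRecord` fields, the `calculate_oee` function and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShiftRecord:
    """Hypothetical inputs for one shift; all field names are illustrative."""
    planned_production_min: float  # scheduled time with planned stops already removed
    unplanned_downtime_min: float  # total duration of unplanned stops
    ideal_cycle_time_min: float    # ideal minutes needed to make one unit
    total_count: int               # every unit produced, good or bad
    good_count: int                # units that passed quality checks


def calculate_oee(rec: ShiftRecord) -> dict[str, float]:
    """Return the three OEE factors and their product, each as a fraction."""
    run_time = rec.planned_production_min - rec.unplanned_downtime_min
    if rec.planned_production_min <= 0 or run_time <= 0 or rec.total_count <= 0:
        raise ValueError("need positive planned time, run time and total count")

    availability = run_time / rec.planned_production_min
    performance = (rec.ideal_cycle_time_min * rec.total_count) / run_time
    quality = rec.good_count / rec.total_count
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Made-up shift data, chosen only to keep the arithmetic easy to follow.
shift = ShiftRecord(
    planned_production_min=400,
    unplanned_downtime_min=80,
    ideal_cycle_time_min=0.5,
    total_count=576,
    good_count=540,
)
result = calculate_oee(shift)
for name, value in result.items():
    print(f"{name}: {value:.1%}")
```

Returning the three factors alongside the product keeps the number traceable: a drop in OEE can be followed back to the factor that moved.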
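The idea of separating signals from natural variability can be sketched with an individuals (XmR) chart, one common form of process behavior chart. This is an assumption about which chart to use, since the text above does not name a specific one. The sketch uses the standard XmR scaling constants (2.66 for the natural process limits, 3.268 for the upper range limit); the function names and the daily OEE values are hypothetical.

```python
from statistics import mean


def xmr_limits(values: list[float]) -> dict[str, float]:
    """Compute XmR chart limits from a series of individual values."""
    if len(values) < 2:
        raise ValueError("need at least two values to form a moving range")

    # Moving range: absolute difference between consecutive points.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    mr_bar = mean(moving_ranges)
    return {
        "center": center,
        "lower": center - 2.66 * mr_bar,   # lower natural process limit
        "upper": center + 2.66 * mr_bar,   # upper natural process limit
        "mr_upper": 3.268 * mr_bar,        # upper limit for the moving ranges
    }


def find_signals(values: list[float], limits: dict[str, float]) -> list[tuple[int, float]]:
    """Return (index, value) pairs that fall outside the natural process limits."""
    return [
        (i, v)
        for i, v in enumerate(values)
        if v < limits["lower"] or v > limits["upper"]
    ]


# Made-up daily OEE percentages for one line; not real plant data.
daily_oee = [68, 70, 69, 71, 70, 69, 68, 70, 55, 70, 69, 71]
limits = xmr_limits(daily_oee)
signal_points = find_signals(daily_oee, limits)
print(limits)
print(signal_points)
```

In this made-up series only the ninth day (55) falls outside the limits, so it is the one point worth investigating. The day-to-day movement between 68 and 71 sits inside the limits and is treated as routine variation, not a reason to tweak the process.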

Measuring and analyzing OEE has proven to be a very powerful tool for improving manufacturing processes. Getting more out of existing equipment goes straight to the bottom line. R. Hansen’s book, Overall Equipment Effectiveness, noted: “A 10% improvement in OEE can result in a 50% improvement in ROA (return on assets), with OEE initiatives generally ten times more cost-effective than purchasing additional equipment.” Given this incentive, why not take advantage of the opportunity and employ thoughtful, standard methods to make OEE the magic metric?

About the Author

R. Tim Gellner has more than 20 years of experience in discrete and continuous manufacturing processes, manufacturing intelligence and process improvement. Tim advocates continuous improvement through the use of actionable and timely information. He earned a bachelor’s degree in systems and control engineering from the University of West Florida in Pensacola, Fla.
