The following discussion is part of an occasional series showcasing the ISA Mentor Program, authored by Greg McMillan, industry consultant, author of numerous process control books, 2010 ISA Life Achievement Award recipient, and retired Senior Fellow from Solutia, Inc. (now Eastman Chemical). Greg will be posting questions and responses from the ISA Mentor Program, with contributions from program participants.

Marsha Wisely is an ISA Mentor Program protégée with 8 years of experience in the automation and simulation industry, working in the chemical and life sciences industries.

Marsha Wisely’s Question

In my personal experience working with users in qualified industries—specifically the Life Sciences industry—they often have a development system that is used for building/testing code changes, and then a qualification system that gets only the approved changes for qualification before implementation on the production system. How can I leverage simulation to support qualification and reduce downtime as part of management of change (MOC) and capital projects?

Simulation Benefits to Testing

The benefits of having a process simulation to test changes prior to startup all center on risk reduction, cost savings, and time savings. The benefits of completing testing before it becomes part of the critical path for startup include:

- More batches can be run in a safe environment

- Operations code is fully challenged before it is in operation

- Costly material consumption can be minimized with the use of virtual runs versus real runs

- Operator recovery from upsets and failures can be practiced safely

- Testing can happen before equipment is installed or available

- Unexpected delays are minimized as necessary changes are found earlier and fewer expensive late changes would occur during startup

- Production time can be optimized, which can have a significant impact in sold-out market conditions and with tight delivery deadlines

- Qualification steps that do not require physical equipment can be completed before startup

Examples of development tests that can be completed in the offline environment include version upgrades, hotfixes, testing connections to third parties or manufacturing execution system (MES) platforms, verification that code changes have the desired effect, and engineering studies to improve production efficiency.

Development Systems

Development systems tend not to be qualified systems. They are often the sandbox for changes before going to the qualified system. All of the benefits of testing listed above can be achieved in this environment, with the exception of starting qualification. For that, we need a qualified system.

Qualified Simulation Systems

Some of the qualification activities are only related to the control system code and can, in theory, be executed prior to startup against a simulated process. Changes to a qualified system all have to go through the qualification process, so having simulation on a qualified system means that your I/O and process models also need to be qualified. This requires some additional effort, but depending on how critical uptime is in your facility, the production time saved may well be worth the time and effort.

The other challenge is that there are additional stakeholders in a qualified system. Depending on your qualification team, they may or may not accept the tests described above as valid for qualification. If the tests need to be repeated on the production system because of their specific qualification requirements, having a qualified simulation system makes significantly less sense.

The main challenge with any offline simulation system is keeping the production and offline development system in sync. This, however, can be mitigated by a clear MOC process where the development system is updated first and then pushed to production. Not only does this ensure that your code is tested offline first, it also ensures that the development system never falls behind and continues to be a useful asset to the facility.

Summary

Testing against simulation saves time during startup because problems are found earlier, so changes that need to be made have minimum impact on production. With a development system process simulation, fewer changes are made directly in production because each change is fully tested and confirmed against the virtual process, addressing problems before any changes are made in production. With a qualification system process simulation, some of the qualification tasks can be performed in advance of startup, further shortening downtime, but this requires buy-in from the qualification team that these tests are valid.

Greg McMillan’s Answer

To verify that tuning and performance are sufficient, the simulation must include the dynamics of both the process and the automation system, including sensor lags, analyzer cycle times, and transportation delays of manipulated flows. To ensure the control strategies are correctly designed and configured, process interactions must be included, which generally requires dynamic first-principle process models.
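As a rough illustration of the automation system dynamics mentioned above, the following minimal Python sketch passes a simulated process value through a transportation delay (dead time) followed by a first-order sensor lag. The function name `simulate_measurement` and its parameters are hypothetical, chosen only for this example, and the simple Euler-style filter is an assumption rather than the method of any particular simulation package.

```python
from collections import deque


def simulate_measurement(process_values, dt, sensor_lag, transport_delay):
    """Return the measured signal after a dead time and a first-order lag.

    process_values  : sequence of true process values, one per time step
    dt              : time step (same time units as the lag and delay)
    sensor_lag      : first-order sensor time constant (assumed value)
    transport_delay : pure dead time, rounded to a whole number of steps
    """
    delay_steps = int(round(transport_delay / dt))
    # Pre-fill the delay buffer with the initial value (steady-state start).
    buffer = deque([process_values[0]] * delay_steps)
    measured = process_values[0]
    alpha = dt / (sensor_lag + dt)  # discrete first-order filter factor
    out = []
    for value in process_values:
        buffer.append(value)
        delayed = buffer.popleft()               # value from delay_steps ago
        measured += alpha * (delayed - measured)  # first-order lag
        out.append(measured)
    return out


if __name__ == "__main__":
    # Unit step in the true process value at step 5.
    true_values = [0.0] * 5 + [1.0] * 45
    seen = simulate_measurement(true_values, dt=1.0, sensor_lag=2.0,
                                transport_delay=3.0)
    print([round(v, 3) for v in seen[:12]])
```

Leaving out either element makes the simulated loop look faster than the real one, so tuning that appears acceptable in the simulation may oscillate in the plant.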

Recent breakthroughs in bioreactor modeling that employ generic, easily parameterized kinetics offer the opportunity to start with a dynamic first-principle process model of low to medium fidelity that can progress to high fidelity as parameters are improved, opening opportunities to reduce process variability and increase yield. The Michaelis-Menten equation, which has the Monod equation for the limitation effect multiplied by an additional equation for the inhibition effect, has been shown to be useful for modeling extracellular and intracellular kinetics for nearly all substrate component concentrations. Convenient cardinal equations are offered that are much easier to parameterize and that capture the nonsymmetrical extreme effects of pH and temperature on cell growth rate and product formation rate.
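To make the structure of such kinetics concrete, here is a minimal Python sketch, not the formulation from the referenced book. It assumes a Monod limitation term multiplied by a simple inhibition term of the form K_i / (K_i + S), and a cardinal-type factor defined only by minimum, optimum, and maximum values. All function names and parameter values are hypothetical and are not fitted to any real cell line.

```python
def monod_with_inhibition(s, k_s, k_i):
    """Monod limitation term times an assumed inhibition term (range 0 to 1)."""
    limitation = s / (k_s + s)    # approaches 1 as substrate becomes plentiful
    inhibition = k_i / (k_i + s)  # falls toward 0 as substrate becomes excessive
    return limitation * inhibition


def cardinal_factor(x, x_min, x_opt, x_max):
    """Cardinal-type factor: 0 at or beyond x_min and x_max, 1 at x_opt.

    The curve is nonsymmetrical whenever x_opt is not midway between
    x_min and x_max.
    """
    if x <= x_min or x >= x_max:
        return 0.0
    num = (x - x_min) * (x - x_max)
    return num / (num - (x - x_opt) ** 2)


def growth_rate(mu_max, s, k_s, k_i, ph, temp, ph_cardinals, temp_cardinals):
    """Specific growth rate as the product of the individual effects."""
    return (mu_max
            * monod_with_inhibition(s, k_s, k_i)
            * cardinal_factor(ph, *ph_cardinals)
            * cardinal_factor(temp, *temp_cardinals))


if __name__ == "__main__":
    # Illustrative (assumed) cardinal values: (min, optimum, max).
    ph_cardinals = (6.0, 7.0, 7.6)
    temp_cardinals = (30.0, 37.0, 40.0)
    for ph in (6.4, 6.7, 7.0, 7.3, 7.5):
        mu = growth_rate(0.04, 2.0, 0.5, 50.0, ph, 37.0,
                         ph_cardinals, temp_cardinals)
        print(f"pH {ph:.1f}: growth rate {mu:.4f} 1/h")
```

The appeal of the cardinal form is that its three parameters can be read directly from experimental profiles, which is what makes it easy to parameterize.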

An automated fitting tool provides the means to estimate model parameters for kinetics, agitation, and sparge from key measurement profiles (e.g., turbidity for cell mass, dielectric constant for viable cell mass, oxygen uptake rate, fast protein liquid chromatography for product concentration, pH, and temperature) and flow rates. Supporting automation blocks offer the opportunity for viable cell and product concentration profile control and endpoint prediction.

The technology and opportunities are highlighted in the Control article, “Bioreactor control breakthroughs: Biopharma industry turns to advanced measurements, simulation and modeling,” and detailed in the ISA book New Directions in Bioprocess Modeling and Control, Second Edition.

The digital twin, with the actual control system configuration (including all batch, PID, and model predictive control (MPC)) and the actual operator interface, offers many advantages for a greater understanding of the interrelationships in the process and of ways to maximize batch repeatability and product concentration. This is best done early in the research and development phase, using in both the laboratory and pilot plant a miniaturized version of the actual control system that will be used in production. The ability to upload the R&D configuration and download it into a digital twin and production plant facilitates exploration, innovation, implementation, and verification.

If the digital twin controllers have the same setpoints and tuning as the actual plant, the fidelity in terms of the process model parameters and installed flow characteristics is seen in how well the manipulated variables in the digital twin match those in the actual plant. Mismatch in the transient response to disturbances and setpoint changes is frequently caused by missing or incorrect automation system dynamics in the digital twin.

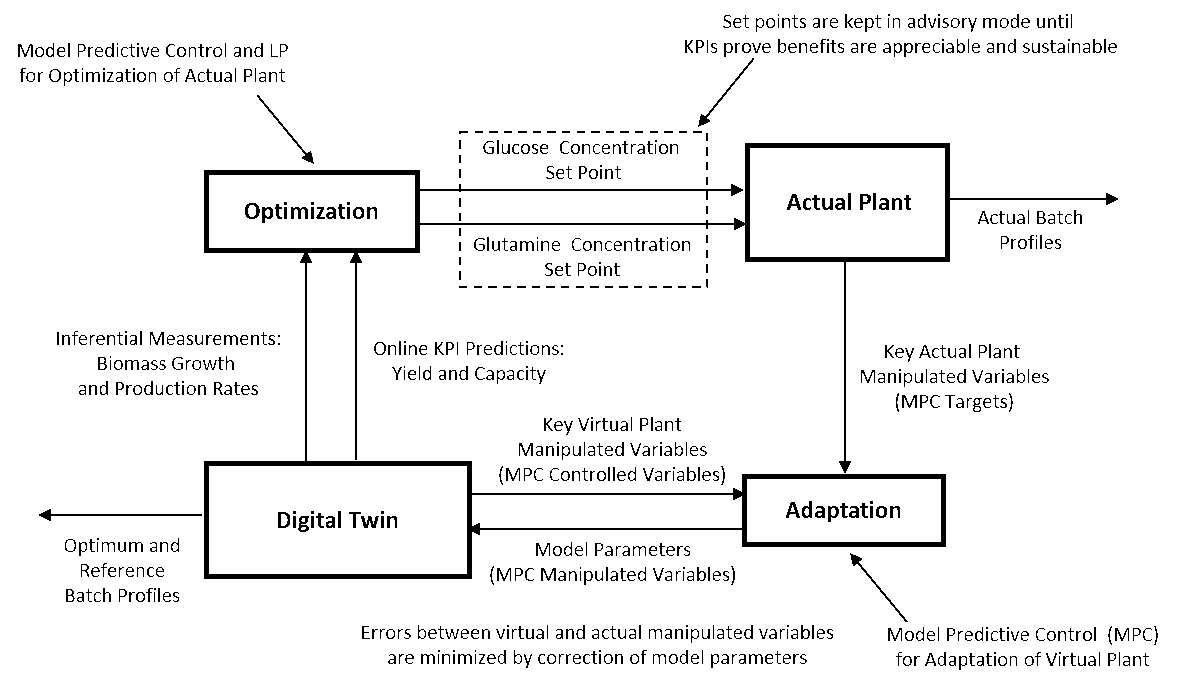

Figure 1: Nonintrusive automated adaptation of model parameters to match manipulated variables with potential future optimization based on improvement in KPIs

Model predictive controllers (MPCs) can be set up to non-intrusively adapt a digital twin model. The MPC models are identified by running the digital twin separately from the actual plant and testing the response of the control system manipulated variables to step changes made in key model parameters. Once the MPCs for adapting the model are ready, the digital twin model is synchronized with the actual plant and is run in real time. The actual plant’s manipulated variables become the targets and the digital twin manipulated variables become the controlled variables of the MPCs. The manipulated variables of the MPCs are key model parameters. Once the models are adapted, inferential measurements are potentially available for process control. For a bioreactor, these could be cell growth rate and product formation rate for profile optimization, as shown in Figure 1, by the manipulation of glucose and glutamine concentration setpoints for fed-batch control. This optimization can be done by an additional MPC whose models can also be developed offline in the digital twin. Key performance indicators (KPIs) are used to verify the performance of the MPC. The optimized setpoints for the actual control system are kept in advisory mode until the KPIs prove the benefits to be appreciable and sustainable.
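The adaptation idea can be sketched in a few lines of Python. This sketch deliberately swaps the MPC for a much simpler integral-only adjustment, and swaps the bioreactor for a single first-order process under PI control, so it shows only the principle: the plant's manipulated variable is the target, the twin's manipulated variable is the controlled variable, and a model parameter (here a process gain) is what gets adjusted. All names and numbers are hypothetical.

```python
def pi_loop_step(y, integral, setpoint, gain, tau, kc, ti, dt):
    """Advance one PI-controlled first-order process by one Euler step."""
    error = setpoint - y
    integral += error * dt
    u = kc * (error + integral / ti)  # controller output (manipulated variable)
    y += dt * (-y + gain * u) / tau   # first-order process response
    return y, integral, u


def adapt_twin_gain(plant_gain=2.0, twin_gain=1.0, adapt_rate=0.05,
                    setpoint=1.0, tau=10.0, kc=0.5, ti=10.0,
                    dt=0.1, duration=2000.0):
    """Run plant and twin side by side with identical setpoint and tuning.

    The twin's process gain is nudged by integral action (a stand-in for
    the MPC) until the twin's manipulated variable matches the plant's.
    Returns (adapted twin gain, plant MV, twin MV) at the end of the run.
    """
    y_p = i_p = y_t = i_t = 0.0
    u_p = u_t = 0.0
    for _ in range(int(duration / dt)):
        y_p, i_p, u_p = pi_loop_step(y_p, i_p, setpoint, plant_gain,
                                     tau, kc, ti, dt)
        y_t, i_t, u_t = pi_loop_step(y_t, i_t, setpoint, twin_gain,
                                     tau, kc, ti, dt)
        # Twin MV above plant MV means the twin gain is too low: raise it.
        twin_gain += adapt_rate * (u_t - u_p) * dt
    return twin_gain, u_p, u_t


if __name__ == "__main__":
    gain, u_plant, u_twin = adapt_twin_gain()
    print(f"adapted twin gain: {gain:.3f}")
    print(f"plant MV: {u_plant:.3f}, twin MV: {u_twin:.3f}")
```

The adaptation only reads the plant's manipulated variable and never writes to the plant, which is what makes the approach non-intrusive. The adaptation rate is kept slow relative to the control loop so the two do not fight each other.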

More general and extensive optimization techniques have been developed. The Heuristic Random Optimizer (HRO) provides both parameter estimation and optimization. The article “Dynamic Optimization of Hybridoma Growth in a Fed-Batch Bioreactor” (2000, John Wiley & Sons) shows how HRO was used to improve the first-principle model and optimize the production of monoclonal antibodies. The model uses modified Monod equation kinetics.