This post was authored by Roy Tomalino, professional services engineer at Beamex.

Modern transmitters are often promoted as smart and extremely accurate. You sometimes even hear that they don’t need to be calibrated because they are so “smart.” So why would you calibrate them? Because the output protocol of a transmitter does not change the fundamental need for calibration.

There are numerous reasons to calibrate instruments, both initially and periodically. The main reasons include:

- Even the best instruments and sensors drift over time, especially when used in demanding process conditions.

- Regulatory requirements, such as quality systems, safety systems, environmental systems, standards, etc.

- Economic reasons, for any measurement that has a direct financial impact.

- Safety for employees as well as customers or patients.

- To achieve high and consistent product quality and to optimize processes.

- Environmental requirements.

Before we jump into the calibration of smart instruments, note one important feature of a smart transmitter: it can be configured via a digital protocol. Configuring a smart transmitter means setting its parameters, which may include the engineering unit and sensor type. Because configuration has to be done through the communication protocol, you need a configuration device that supports the selected protocol, typically called a communicator. It is crucial to remember that although a communicator can be used for configuration, it is not a reference standard and therefore cannot be used for metrological calibration.
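To make the distinction concrete, here is a minimal Python sketch. It assumes a pressure transmitter whose configured lower and upper range values (the hypothetical parameters `lrv` and `urv` below) define how the process value maps onto the 4-20mA signal. Changing those parameters with a communicator rescales the output, but nothing in this step checks whether the sensor itself reads correctly; that still takes a comparison against a reference standard.

```python
def pv_to_ma(pv: float, lrv: float, urv: float) -> float:
    """Ideal 4-20 mA output for a process value, given the configured range.

    lrv/urv are hypothetical names for the lower/upper range values set
    during configuration; they are assumptions made for this sketch.
    """
    if urv == lrv:
        raise ValueError("upper and lower range values must differ")
    return 4.0 + 16.0 * (pv - lrv) / (urv - lrv)


# The same 5.0 bar input under two different range configurations
# (illustrative values). Only the scaling changes; the sensor is not verified.
print(pv_to_ma(5.0, lrv=0.0, urv=10.0))  # 12.0
print(pv_to_ma(5.0, lrv=0.0, urv=20.0))  # 8.0
```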

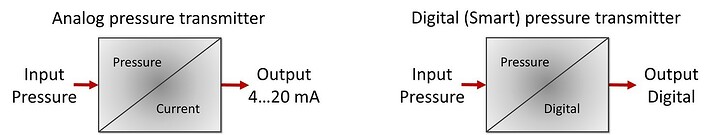

A simplified principle diagram of conventional analog and digital smart pressure transmitters.

Configuring the parameters of a smart transmitter with a communicator is not in itself a metrological calibration (although it may be part of an adjustment or trim task), and it does not assure accuracy. A real metrological calibration, by definition, always requires a traceable reference standard (calibrator). According to international standards, calibration is the comparison of the device under test against a traceable reference instrument (calibrator), together with documentation of that comparison. Although calibration formally does not include any adjustment, adjustments are often made as part of the calibration process. If the calibration is done with a documenting calibrator, the results are documented automatically.

To calibrate a conventional analog transmitter, you generate or measure the transmitter input and, at the same time, measure the transmitter output. In this case calibration is quite easy and straightforward.
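The compare-and-document idea can be sketched in a few lines of Python. This is a minimal illustration, not any calibrator's actual algorithm: it assumes a linear 4-20mA transmitter, expresses error as a percentage of the 16mA output span, and uses a hypothetical pass/fail tolerance. Each reading pairs the input applied by the reference calibrator with the output current the calibrator measured.

```python
from dataclasses import dataclass


@dataclass
class CalPoint:
    applied: float      # input applied/measured by the reference calibrator (e.g. bar)
    measured_ma: float  # transmitter output current read by the calibrator (mA)


def calibrate_analog(points, lrv, urv, tolerance_pct):
    """Compare each reading with the ideal 4-20 mA value and return a record.

    Error is expressed as % of the 16 mA output span; tolerance_pct is a
    hypothetical acceptance limit chosen for this sketch.
    """
    span = urv - lrv
    record = []
    for p in points:
        expected_ma = 4.0 + 16.0 * (p.applied - lrv) / span
        error_pct = (p.measured_ma - expected_ma) / 16.0 * 100.0
        record.append({
            "applied": p.applied,
            "expected_ma": expected_ma,
            "measured_ma": p.measured_ma,
            "error_pct": error_pct,
            "passed": abs(error_pct) <= tolerance_pct,
        })
    return record


# Illustrative three-point check of a 0-10 bar transmitter with a 0.5 % limit.
readings = [CalPoint(0.0, 4.01), CalPoint(5.0, 12.03), CalPoint(10.0, 20.10)]
for row in calibrate_analog(readings, lrv=0.0, urv=10.0, tolerance_pct=0.5):
    status = "PASS" if row["passed"] else "FAIL"
    print(f"{row['applied']:5.1f} bar  expected {row['expected_ma']:6.3f} mA  "
          f"measured {row['measured_ma']:6.3f} mA  error {row['error_pct']:+.3f} %  {status}")
```

In practice, the tolerance, the number of test points, and the error convention come from your own calibration procedure; a documenting calibrator keeps an equivalent record automatically.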

For an analog transmitter, you need a dual-function calibrator that can handle the transmitter input and output at the same time, or alternatively two separate single-function calibrators.

But how can a smart transmitter, whose output is a digital protocol signal, be calibrated? The transmitter input still needs to be generated or measured the same way as with a conventional transmitter, i.e. by using a calibrator. However, to see the transmitter output, you need a device or software that can read and interpret the digital protocol. This can make calibration a very challenging task: several types of devices, and several people, may be needed to do the job. Sometimes it is very difficult or even impossible to find a suitable device, especially a mobile one, that can read the digital output. Wired HART (as opposed to WirelessHART) is a hybrid protocol that superimposes digital communication on a conventional analog 4-20mA output signal.
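For a HART device, reading the digital output means decoding the response to a command such as Command 1 (Read Primary Variable). The Python sketch below assumes that the command-specific data following the two status bytes is one unit-code byte and a 4-byte big-endian IEEE 754 float; message framing, the checksum, and the physical layer are deliberately left out. The decoded value is then compared with the reference input, just as an analog reading would be.

```python
import struct
from typing import Tuple


def parse_cmd1_data(data: bytes) -> Tuple[int, float]:
    """Split assumed HART Command 1 response data into (unit_code, pv).

    Assumed layout (status bytes already removed):
      byte 0     -> engineering unit code
      bytes 1..4 -> primary variable, IEEE 754 single precision, big-endian
    """
    if len(data) < 5:
        raise ValueError("expected at least 5 data bytes")
    unit_code = data[0]
    (pv,) = struct.unpack(">f", data[1:5])
    return unit_code, pv


def digital_error_pct(reference: float, digital_pv: float, lrv: float, urv: float) -> float:
    """Error of the digital PV against the reference input, in % of the configured span."""
    return (digital_pv - reference) / (urv - lrv) * 100.0


# Illustrative data only: unit code 7 is an arbitrary example value,
# followed by 5.02 packed as a big-endian float.
sample = bytes([7]) + struct.pack(">f", 5.02)
unit, pv = parse_cmd1_data(sample)
print(unit, round(pv, 3), round(digital_error_pct(5.0, pv, lrv=0.0, urv=10.0), 3))
```

The meaning of a unit code should be looked up in the HART common tables rather than assumed.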

The 4-20mA output signal of a wired HART transmitter is calibrated the same way as that of a conventional transmitter. However, to do any configuration or trimming, or to read the digital output signal (if it is used), a HART communicator is needed. Smart instruments need to be configured and calibrated, just like traditional analog instruments, but the calibration method is somewhat different: it requires a device that can interpret digital protocols. The best equipment for both configuration and calibration is a high-accuracy, multifunctional documenting calibrator that is also a communicator. Using this type of device eliminates the need to carry more than one piece of equipment into the field, and it lets you both configure and calibrate instruments.
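Because a wired HART transmitter exposes both a digital PV and an analog current, one way to reason about where an error comes from is to split it into two parts: the digital PV against the reference input, and the measured current against the current the digital PV should produce. These correspond to the two stages usually addressed by sensor trim and output (D/A) trim. The Python sketch below is an illustration that assumes a linear 4-20mA range; the parameter names are hypothetical, and actual trim procedures are device-specific.

```python
def split_hart_errors(reference, digital_pv, measured_ma, lrv, urv):
    """Return (sensor_err, output_err, total_err), each in % of span.

    reference   -- input applied/measured by the reference calibrator
    digital_pv  -- PV read digitally over HART
    measured_ma -- loop current measured by the calibrator
    lrv, urv    -- configured range (hypothetical names for this sketch)
    """
    span = urv - lrv
    # Digital stage: how far the transmitter's PV is from the reference input.
    sensor_err = (digital_pv - reference) / span * 100.0
    # Analog stage: how far the loop current is from what the digital PV implies.
    ideal_ma_from_pv = 4.0 + 16.0 * (digital_pv - lrv) / span
    output_err = (measured_ma - ideal_ma_from_pv) / 16.0 * 100.0
    # End to end: loop current against what the reference input implies.
    expected_ma = 4.0 + 16.0 * (reference - lrv) / span
    total_err = (measured_ma - expected_ma) / 16.0 * 100.0
    return sensor_err, output_err, total_err


# Illustrative reading: the digital PV matches the reference, but the current is off.
s, o, t = split_hart_errors(5.0, 5.0, 12.08, lrv=0.0, urv=10.0)
print(f"sensor {s:+.2f} %  output {o:+.2f} %  total {t:+.2f} %")
```

Because the mapping is linear, the two components add up to the total analog error. A reading with a near-zero sensor component but a large output component points at the analog output stage rather than the sensor.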

About the Author

Roy Tomalino, a professional services engineer at Beamex, has been teaching calibration management for 13 years. He conducts educational training sessions and provides technical support for Beamex customers. Roy earned a bachelor’s degree in computer information systems from Regis University in Denver, Colo., and an associate of applied science degree in electronics technology from the Denver Institute of Technology. Roy is also Six Sigma Green Belt certified.